Introduction

Wintellect's open source Power Collections library describes itself as "a set of classes and methods that add new generic collection types and algorithms to the .NET Framework."

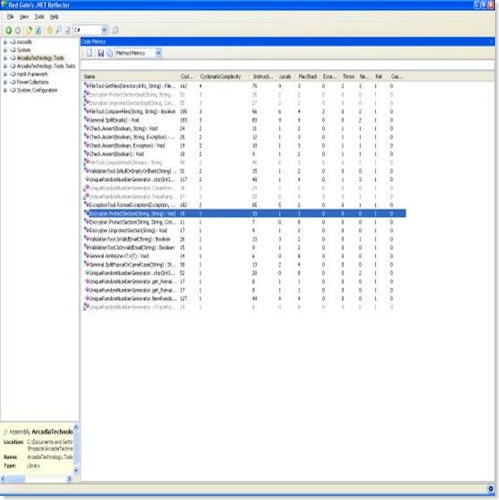

Power Collections was developed originally by a member of the C# 1.0 design team. It is designed to be used with .NET 2.0 generics and provides a library of data structures and algorithms somewhat similar to the C++ Standard Template Library (STL). With the advent of .NET 3.5 and LINQ the algorithms part of the mix is perhaps of less value than it was, since I suspect many of them could be implemented more "fluently" using LINQ. However, even now there are some data structures present in Power Collections that are not present in the .NET Base Class Library (BCL).

The library itself is well-documented and the source includes XML code comments (so you can see class and method documentation via IntelliSense) and an accompanying set of unit tests. It is generally easy to use in that it fits seamlessly with .NET's existing generics library.

Data Structures

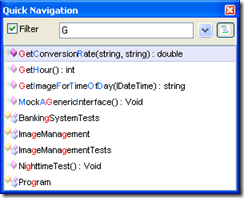

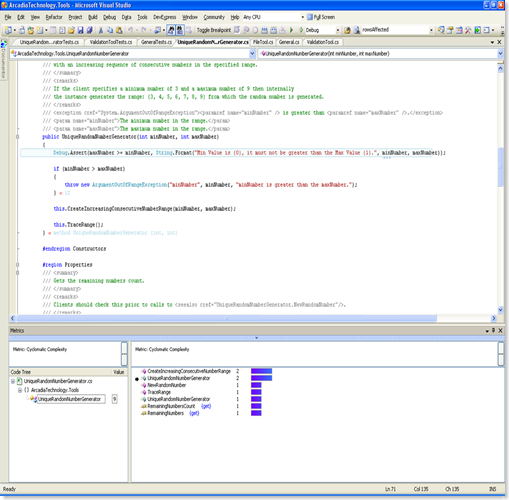

A while ago I wrote a unique random number generator class. What does "unique" mean? Given a collection of positive integers that can contain duplicates I wanted to select a number from a random position in the range such that once selected that position can never be selected again.

So the generator class works such that once a number has been generated for an instance the number at that position in the range will not be generated again. Subsequent generations will eventually exhaust the entries in the range. An example will clarify this.

This range has duplicates.

{ 1, 2, 3, 4, 3, 6, 4, 8, 17, 42, 6 }

If the first 3 is selected at random then the generator can only subsequently select the second 3. If a random number were generated eleven times we might get this result: 42 6 3 4 2 4 6 17 3 8 1 .

This range is an increasing sequence of consecutive numbers.

{ 1, 2, 3, 4, 5, 6, 7, 8 }

If a random number were generated eight times we might get this result: 4 2 5 1 8 7 3 6

I also created some unit tests for the class. One such test was to take a collection with duplicates and run the generator until it exhausted all the entries in the range and then check that the source and generated collections are equal.

A collection containing duplicates is a Bag (or Multiset) data structure. The .NET Base Class Library does not have such a data structure but the Power Collections library does. So it was convenient to make use of this in my unit tests.

I was then able to set up tests such as this:

int[] numbers = new int[] { 1, 2, 3, 4, 3, 6, 4, 8, 17, 42, 6 };

Bag<int> expected = new Bag<int>(numbers);

Bag<int> actual = new Bag<int>();

// Generate contents of actual

// ...

Assert.IsTrue(expected.IsEqualTo(actual));

I often have a need to pass around just a pair of values that have no behaviour associated with them. A struct would be suitable but rather than define a new type I can use Power Collection's Pair data structure. This is defined as Pair<TFirst,TSecond>. Power Collections also has a Triple defined as Triple<TFirst,TSecond,TThird>.

In .NET 4.0 a new Tuple type has been defined which serves the same purpose as Pair and Triple but until then we can use the Power Collections implementations.

Here's how we might use Pair:

// Display print resolution and drops per dot for current selection

Pair<string, string> pair =

SplitPrintResolutionAndDropsPerDot(text);

string printResolution = pair.First;

string dropsPerDot = pair.Second;

txtPrintModeResolution.Text = printResolution;

txtPrintModeDPD.Text = dropsPerDot;

Another useful data structure is the MultiDictionary (also referred to as a Multimap). This is missing from the .NET BCL prior to .NET 3.5 (.NET 3.5 has the Lookup class in the System.Linq namespace). The Power Collections library defines the class thus:

The MultiDictionary class associates values with a key. Unlike a Dictionary, each key can have multiple values associated with it. When indexing a MultiDictionary, instead of a single value associated with a key, you retrieve an enumeration of values.

Here's an example:

// Initialise

bool allowDuplicateValues = true;

MultiDictionary<int, int> dict =

newMultiDictionary<int, int>(allowDuplicateValues);

dict.AddMany(1, new int[] { 4, 4, 6});

dict.AddMany(2, new int[] { 40, 3, 6});

dict.AddMany(3, new int[] { 42, 15, 8});

// Print

dict.ForEach(

delegate(KeyValuePair<int, ICollection<int>> kvp)

{

Console.WriteLine("Key: "+ kvp.Key);

Console.Write("Values: ");

foreach (int var inkvp.Value)

{

Console.Write(var + " ");

}

Console.Write(Environment.NewLine);

});

Algorithms

The Power Collections documentation describes the Algorithms class thus:

Algorithms contains a number of static methods that implement algorithms that work on collections. Most of the methods deal with the standard generic collection interfaces such as IEnumerable<T>, ICollection<T> and IList<T>.

Much of what is provided here can now be implemented more "fluently" using LINQ. It also includes some methods that are already available in .NET 2.0. However, Power Collections was written while .NET 2.0 was being developed and as such some methods were provided before they were added to the completed .NET 2.0 BCL.

A very useful algorithm is TypedAs defined as follows:

Given a non-generic IEnumerable interface, wrap a generic IEnumerable<T> interface around it. The generic interface will enumerate the same objects as the underlying non-generic collection, but can be used in places that require a generic interface. The underlying non-generic collection must contain only items that are of type T or a type derived from it. This method is useful when interfacing older, non-generic collections to newer code that uses generic interfaces.

This is especially useful in UI code when dealing with collections defined as IEnumerable or ICollection where you want to apply some generics or Power Collections algorithm to them. Here's an example that is not a UI one but illustrates the idea:

AuthorizationRuleCollection rulesCol =

fileSecurity.GetAccessRules(

includeExplicit,

includeInherited,

type

);

List<AuthorizationRule> rules =

new List<AuthorizationRule>(

Algorithms.TypedAs<AuthorizationRule>(rulesCol)

);

AuthorizationRule rule =

rules.Find(delegate(AuthorizationRule match)

{

return match.IdentityReference.Value.Contains(AccountName);

});

By converting AuthorizationRuleCollecton to List<AuthorizationRule> via TypedAs we are able to apply List.Find using an anonymous delegate.

In .NET 3.5 we can now do this via the IEnumerable.Cast (and similar ICollection and IList) extension method.

Set Operations

PowerCollections has a Set class that was missing from .NET 2.0 but there is now one in .NET 3.5 (HashSet). However, Power Collections also has some set methods as generic algorithms that can be applied to IEnumerable<T> collections. Here is an example:

// Get the authorised roles for this page

string[] authorisedRoles =

Array.ConvertAll<string, string>(

GetAuthorisedRoles(configPath),

StringHelper.ToLowerAndTrim

);

// Get all roles for the user

string[] userRoles =

Array.ConvertAll<string, string>(

Roles.GetRolesForUser(),

StringHelper.ToLowerAndTrim

);

// Get the subset of the user's roles that are

// authorised for this page

List<string> userAuthorisedRoles =

new List<string>(

Algorithms.SetIntersection(authorisedRoles, userRoles)

);

The idea here is that there is a set of authorised roles for the page, let's say A, B, C, D, E. The user may have a set of roles C, E, F, G. They can access the page if one or more of their roles matches an authorised role for the page, in this case C and E. This is a set intersection operation.

The Power Collections library is very easy to use and is well-documented and tested. More developers should have it in their toolbox.